Continuous iteration and improvement are core to precise anomaly detection. With Validio 7.0, we’re taking another step toward making data quality monitoring smarter and more adaptive.

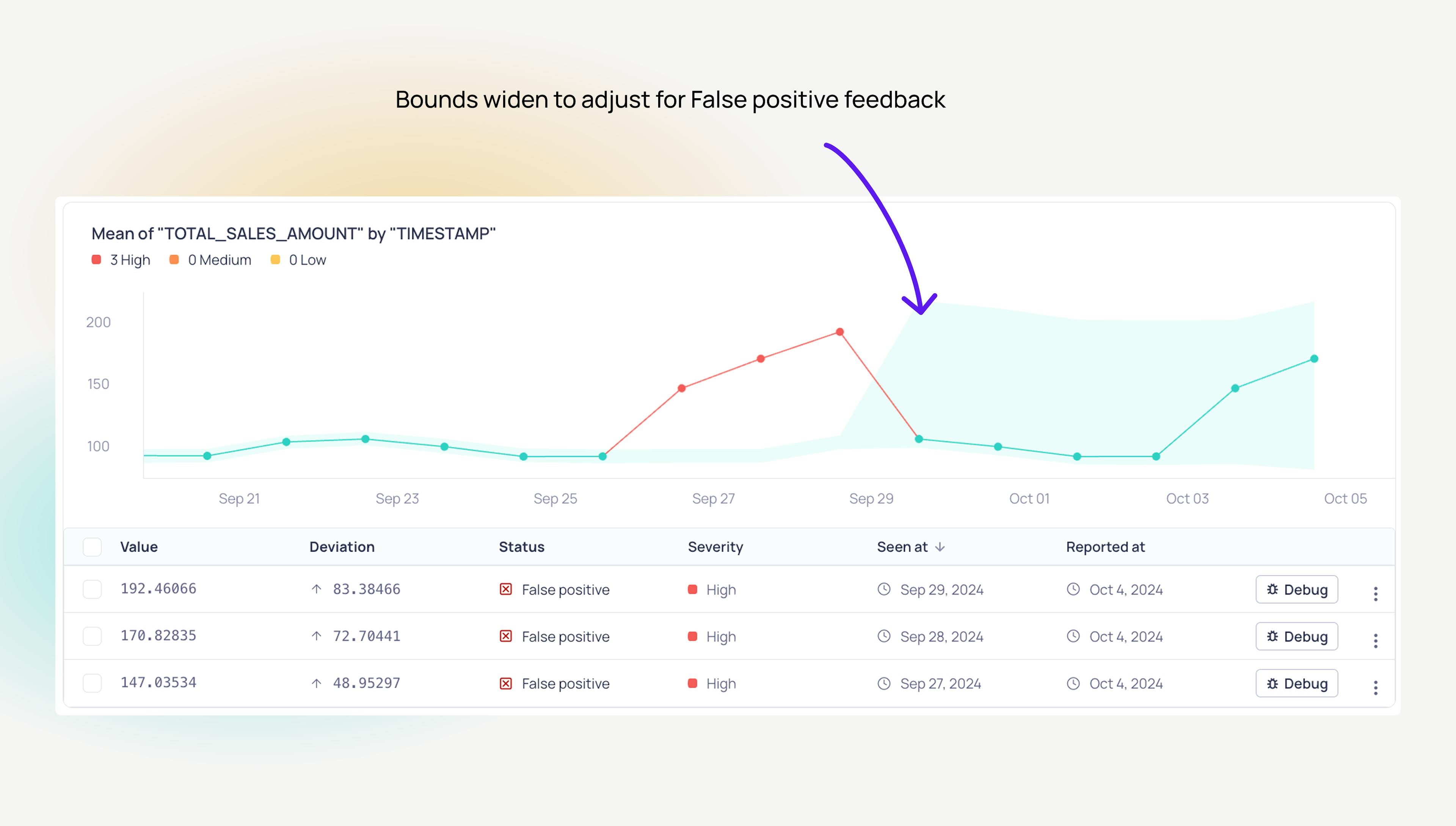

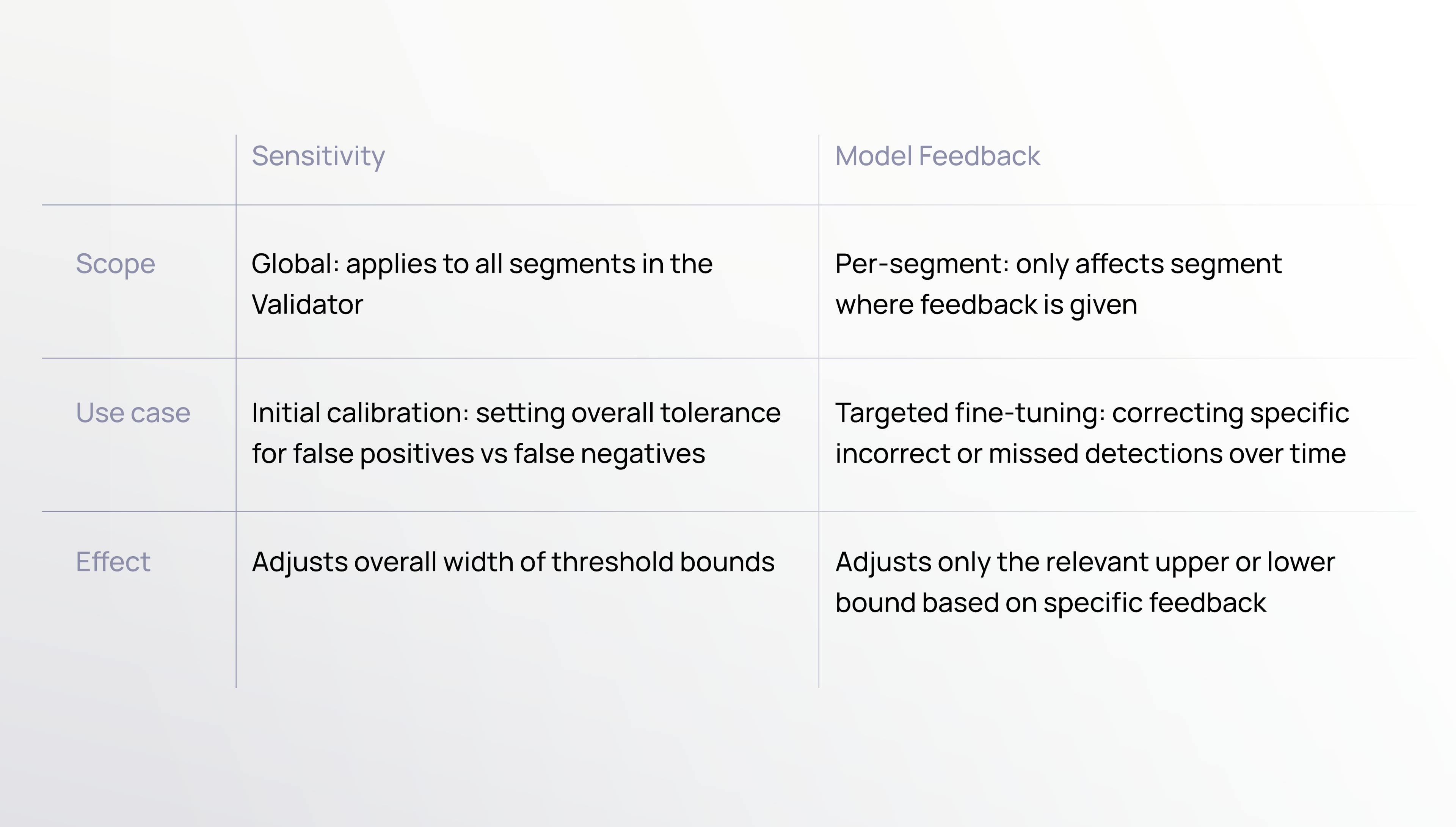

This release introduces improvements to model feedback and retraining, allowing your AI-powered anomaly detection to learn directly from your input and become more accurate over time.

In this blog post, we'll cover how the updated model feedback works. See the changelog for full details of the 7.0 release.